select cast(10000 as int),
case data_type
when 'TIMESTAMP' then
concat(' ,cast((cast(sys_extract_utc(update_time) AS DATE) - TO_DATE('01-01-1970 00:00:00', 'DD-MM-YYYY HH24:MI:SS')) * 86400 AS INT) last_date_time_ts')
else
concat(' ,#unknown data_type : ', data_type)
end
from (select row_number() over() ln, table_name, column_name, data_type, column_id
from test.all_tab_columns
where date_key in (select max(date_key) from test.all_tab_columns)
and table_name = 'test_table'
) data

I tried to extract the last updated time using the query above, but Hive throws an exception:

Error: Error while compiling statement: FAILED: ParseException line 4:7 cannot recognize input near "cast((cast(sys_extract_utc(update_time) AS DATE) - TO_DATE(" '01' '-' in expression specification (state=42000,code=40000)

I have the query below:

set hive.cli.print.header=true;
set hive.query.max.partition=1000;
set hive.mapred.mode=unstrict;
SELECT
dim_lookup("accounts",name,"account_id") = '28016' as company,
dim_lookup("campaigns",name,"campaign_id") in (117649,112311,112319,112313,107799,110743,112559,112557,105191,105231,107377,108675,106587,107325,110671,107329,107181,106565,105123,106569,106579,110835,105127,105243,107185,105211,105215) as campaign_name,
case when is_click_through=0 then "PV" else "PC" end as conv_type,
(SELECT COUNT(1) FROM impressions WHERE ad_info[2] in (117649,112311,112319,112313,107799,110743,112559,112557,105191,105231,107377,108675,106587,107325,110671,107329,107181,106565,105123,106569,106579,110835,105127,105243,107185,105211,105215)) AS impressions
FROM actions
WHERE
data_date>='20170101'
AND data_date<='20171231'
AND conversion_action_id in (20769223,20769214,20769219,20764929,20764932,20764935,20769215,20769216,20764919,20769218,20769217,20769220,20769222)
GROUP BY conv_type

When I run it, I get this error message:

ERROR ql.Driver: FAILED: ParseException line 8:1 cannot recognize input near 'SELECT' 'COUNT' '(' in expression specification

I'm trying to get the impression count for each of the specified conversion_action_id values. What could be wrong with my query? Thanks for your help.

FYI: ad_info[2] and campaign_id are the same.

The problem is quite clear: you have a subquery inside your SELECT list.

That is not supported.

Unfortunately, the exact solution is less clear, since I'm not entirely sure what you want, but here are a few general tips:

- Write your subquery on its own, test it, and make sure it works.

- Instead of putting it in your SELECT clause, put it in your FROM clause and (as always) SELECT from what is in the FROM clause.

Just think of your subquery's result as another table that can be used in the FROM clause and that has to be combined (JOIN, UNION?) with the other tables in the FROM clause.
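The "treat the subquery as another table" advice can be illustrated outside Hive with a small in-memory Java analogy. This is not HiveQL and not the asker's real schema: the record types `Impression`, `Action`, `Row` and the sample values are hypothetical. The subquery is computed on its own into a per-campaign impression count, which then behaves like a second table joined to the action rows on the campaign id (relying on the question's note that ad_info[2] and campaign_id are the same).

```java
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

public class DerivedTableJoin {
    // Hypothetical row types standing in for the impressions and actions tables.
    record Impression(long campaignId) {}
    record Action(long campaignId, int isClickThrough) {}
    record Row(long campaignId, String convType, long impressions) {}

    // Step 1: the "subquery" run on its own, counting impressions per campaign.
    static Map<Long, Long> impressionsPerCampaign(List<Impression> impressions) {
        return impressions.stream()
                .collect(Collectors.groupingBy(Impression::campaignId, Collectors.counting()));
    }

    // Step 2: use that result like another table and join it to the actions on
    // campaign id. This is an inner join: actions with no impressions are dropped.
    static List<Row> joinActions(List<Action> actions, Map<Long, Long> counts) {
        return actions.stream()
                .filter(a -> counts.containsKey(a.campaignId()))
                .map(a -> new Row(a.campaignId(),
                        a.isClickThrough() == 0 ? "PV" : "PC",
                        counts.get(a.campaignId())))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Impression> impressions = List.of(
                new Impression(117649), new Impression(117649), new Impression(112311));
        List<Action> actions = List.of(
                new Action(117649, 0), new Action(112311, 1), new Action(999, 0));
        Map<Long, Long> counts = impressionsPerCampaign(impressions);
        joinActions(actions, counts).forEach(System.out::println);
    }
}
```

In HiveQL terms, `impressionsPerCampaign` plays the role of a GROUP BY subquery placed in the FROM clause, and `joinActions` is an inner JOIN on the campaign id; a LEFT JOIN would instead keep actions whose campaign has no impressions.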

Code of Conduct

- I agree to follow this project’s Code of Conduct

Search before asking

- I have searched in the issues and found no similar issues.

Describe the bug

I use the Hive JDBC dialect plugin as described here: https://kyuubi.readthedocs.io/en/latest/extensions/engines/spark/jdbc-dialect.html

However, I'm running into an issue in my Spark program.

I built kyuubi-extension-spark-jdbc-dialect_2.12-1.7.0-SNAPSHOT.jar from the master branch and put it into $SPARK_HOME/jars.

My code:

import org.apache.spark.SparkConf;
import org.apache.spark.sql.Dataset;
import org.apache.spark.sql.Row;
import org.apache.spark.sql.SaveMode;
import org.apache.spark.sql.SparkSession;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class HiveDemo {
    private static final Logger log = LoggerFactory.getLogger(HiveDemo.class);

    public static void main(String[] args) {
        SparkConf sparkConf = new SparkConf();
        sparkConf.set("spark.sql.extensions", "org.apache.spark.sql.dialect.KyuubiSparkJdbcDialectExtension");
        SparkSession sparkSession = SparkSession.builder()
                .config(sparkConf)
                .enableHiveSupport()
                .getOrCreate();
        boolean flag = true;
        try {
            Dataset<Row> rowDataset = sparkSession.read()
                    .format("jdbc")
                    .option("url", "jdbc:hive2://10.58.11.24:10000/ibond")
                    .option("dbtable", "a1000w_30d")
                    .option("user", "tdops")
                    .option("password", "None")
                    .option("driver", "org.apache.hive.jdbc.HiveDriver")
                    .load();
            rowDataset.show();
            rowDataset.limit(20)
                    .write()
                    .format("jdbc")
                    .mode(SaveMode.Overwrite)
                    .option("driver", "org.apache.hive.jdbc.HiveDriver")
                    .option("url", "jdbc:hive2://10.58.11.24:10000/ibond")
                    .option("dbtable", "mgy_test_1")
                    .option("user", "tdops")
                    .option("password", "None")
                    .save();
            log.info("Hive Demo Success.");
        } catch (Exception ex) {
            ex.printStackTrace();
            flag = false;
            throw new RuntimeException("Hive Demo Failed.", ex);
        } finally {
            sparkSession.stop();
            System.exit(flag ? 0 : -1);
        }
    }
}

Console output:

org.apache.hive.service.cli.HiveSQLException: Error while compiling statement: FAILED: ParseException line 1:37 cannot recognize input near '.' 'id1' 'BIGINT' in column type
at org.apache.hive.jdbc.Utils.verifySuccess(Utils.java:267)
at org.apache.hive.jdbc.Utils.verifySuccessWithInfo(Utils.java:253)
at org.apache.hive.jdbc.HiveStatement.runAsyncOnServer(HiveStatement.java:313)
at org.apache.hive.jdbc.HiveStatement.execute(HiveStatement.java:253)
at org.apache.hive.jdbc.HiveStatement.executeUpdate(HiveStatement.java:490)
at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$.executeStatement(JdbcUtils.scala:993)
at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$.createTable(JdbcUtils.scala:878)
at org.apache.spark.sql.execution.datasources.jdbc.JdbcRelationProvider.createRelation(JdbcRelationProvider.scala:81)
at org.apache.spark.sql.execution.datasources.SaveIntoDataSourceCommand.run(SaveIntoDataSourceCommand.scala:46)
at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:70)
at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:68)
at org.apache.spark.sql.execution.command.ExecutedCommandExec.doExecute(commands.scala:90)
at org.apache.spark.sql.execution.SparkPlan.$anonfun$execute$1(SparkPlan.scala:180)
at org.apache.spark.sql.execution.SparkPlan.$anonfun$executeQuery$1(SparkPlan.scala:218)
at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)
at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:215)
at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:176)
at org.apache.spark.sql.execution.QueryExecution.toRdd$lzycompute(QueryExecution.scala:132)
at org.apache.spark.sql.execution.QueryExecution.toRdd(QueryExecution.scala:131)
at org.apache.spark.sql.DataFrameWriter.$anonfun$runCommand$1(DataFrameWriter.scala:989)
at org.apache.spark.sql.execution.SQLExecution$.$anonfun$withNewExecutionId$5(SQLExecution.scala:103)
at org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:163)
at org.apache.spark.sql.execution.SQLExecution$.$anonfun$withNewExecutionId$1(SQLExecution.scala:90)
at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:772)
at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:64)
at org.apache.spark.sql.DataFrameWriter.runCommand(DataFrameWriter.scala:989)
at org.apache.spark.sql.DataFrameWriter.saveToV1Source(DataFrameWriter.scala:438)
at org.apache.spark.sql.DataFrameWriter.saveInternal(DataFrameWriter.scala:415)
at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:301)
at cn.tongdun.sparkdatahandler.HiveDemo.main(HiveDemo.java:46)
at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(Unknown Source)
at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(Unknown Source)
at java.base/java.lang.reflect.Method.invoke(Unknown Source)
at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)
at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:951)
at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:180)
at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:203)
at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:90)
at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:1030)
at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:1039)
at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
Caused by: org.apache.hive.service.cli.HiveSQLException: Error while compiling statement: FAILED: ParseException line 1:37 cannot recognize input near '.' 'id1' 'BIGINT' in column type
at org.apache.hive.service.cli.operation.Operation.toSQLException(Operation.java:380)
at org.apache.hive.service.cli.operation.SQLOperation.prepare(SQLOperation.java:206)
at org.apache.hive.service.cli.operation.SQLOperation.runInternal(SQLOperation.java:290)
at org.apache.hive.service.cli.operation.Operation.run(Operation.java:320)
at org.apache.hive.service.cli.session.HiveSessionImpl.executeStatementInternal(HiveSessionImpl.java:530)
at org.apache.hive.service.cli.session.HiveSessionImpl.executeStatementAsync(HiveSessionImpl.java:517)
at org.apache.hive.service.cli.CLIService.executeStatementAsync(CLIService.java:310)
at org.apache.hive.service.cli.thrift.ThriftCLIService.ExecuteStatement(ThriftCLIService.java:530)
at org.apache.hive.service.rpc.thrift.TCLIService$Processor$ExecuteStatement.getResult(TCLIService.java:1437)
at org.apache.hive.service.rpc.thrift.TCLIService$Processor$ExecuteStatement.getResult(TCLIService.java:1422)
at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39)
at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:39)
at org.apache.hive.service.auth.TSetIpAddressProcessor.process(TSetIpAddressProcessor.java:56)
at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:286)
at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
at java.lang.Thread.run(Thread.java:748)
Caused by: java.lang.RuntimeException: org.apache.hadoop.hive.ql.parse.ParseException:line 1:37 cannot recognize input near '.' 'id1' 'BIGINT' in column type
at org.apache.hadoop.hive.ql.parse.ParseDriver.parse(ParseDriver.java:211)
at org.apache.hadoop.hive.ql.parse.ParseUtils.parse(ParseUtils.java:77)
at org.apache.hadoop.hive.ql.parse.ParseUtils.parse(ParseUtils.java:70)
at org.apache.hadoop.hive.ql.Driver.compile(Driver.java:468)
at org.apache.hadoop.hive.ql.Driver.compileInternal(Driver.java:1317)
at org.apache.hadoop.hive.ql.Driver.compileAndRespond(Driver.java:1295)
at org.apache.hive.service.cli.operation.SQLOperation.prepare(SQLOperation.java:204)
... 15 more

Affects Version(s)

master

Kyuubi Server Log Output

No response

Kyuubi Engine Log Output

No response

Kyuubi Server Configurations

No response

Kyuubi Engine Configurations

No response

Additional context

No response

Are you willing to submit PR?

- Yes. I would be willing to submit a PR with guidance from the Kyuubi community to fix.

- No. I cannot submit a PR at this time.

Solution 1

The issue isn't actually a syntax error; the Hive ParseException is caused by a reserved keyword in Hive (in this case, end).

The solution: use backticks around the offending column name:

CREATE EXTERNAL TABLE moveProjects (cid string, `end` string, category string)
STORED BY 'org.apache.hadoop.hive.dynamodb.DynamoDBStorageHandler'
TBLPROPERTIES ("dynamodb.table.name" = "Projects",
"dynamodb.column.mapping" = "cid:cid,end:end,category:category");

With the added backticks around end, the query works as expected.
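When DDL like this is generated from code, one option is to wrap every column name in backticks instead of only the ones known to be reserved. A minimal Java sketch, assuming the hypothetical helper names `quote` and `columnList` (they are not part of any Hive API): it doubles any backtick inside a name, which is how the Hive language manual describes escaping a backtick within a quoted identifier (documented for Hive 0.13 and later; older versions may behave differently).

```java
import java.util.List;
import java.util.stream.Collectors;

public class HiveIdentifiers {
    // A column definition: name plus Hive type, e.g. ("end", "string").
    record Column(String name, String type) {}

    // Wrap an identifier in backticks. A backtick inside the name is doubled,
    // which is how HiveQL escapes it within a backtick-quoted identifier.
    static String quote(String identifier) {
        return "`" + identifier.replace("`", "``") + "`";
    }

    // Render "(name type, name type, ...)" with every column name quoted, so a
    // reserved word such as end never reaches the Hive parser unquoted.
    static String columnList(List<Column> columns) {
        return columns.stream()
                .map(c -> quote(c.name()) + " " + c.type())
                .collect(Collectors.joining(", ", "(", ")"));
    }

    public static void main(String[] args) {
        List<Column> cols = List.of(
                new Column("cid", "string"),
                new Column("end", "string"),
                new Column("category", "string"));
        // Prints: CREATE EXTERNAL TABLE moveProjects (`cid` string, `end` string, `category` string)
        System.out.println("CREATE EXTERNAL TABLE moveProjects " + columnList(cols));
    }
}
```

Quoting unconditionally avoids having to track which words a given Hive version reserves; the DynamoDB column mapping string is a table property, not an identifier, so it stays unquoted.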

Reserved words in Amazon Hive (as of February 2013):

IF, HAVING, WHERE, SELECT, UNIQUEJOIN, JOIN, ON, TRANSFORM, MAP, REDUCE, TABLESAMPLE, CAST, FUNCTION, EXTENDED, CASE, WHEN, THEN, ELSE, END, DATABASE, CROSS

Source: This Hive ticket from the Facebook Phabricator tracker
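To check column names against the list above before running a CREATE statement, a case-insensitive set lookup is enough. The sketch below is hypothetical (`isReserved` and `quoteIfReserved` are illustrative names, not a Hive API) and only knows the February 2013 list quoted here; other Hive versions reserve different words, so quoting every identifier is the safer habit.

```java
import java.util.Locale;
import java.util.Set;

public class ReservedWords {
    // The reserved words listed above (Amazon Hive, February 2013). This is a
    // snapshot; other Hive versions reserve different or additional words.
    static final Set<String> RESERVED = Set.of(
            "IF", "HAVING", "WHERE", "SELECT", "UNIQUEJOIN", "JOIN", "ON",
            "TRANSFORM", "MAP", "REDUCE", "TABLESAMPLE", "CAST", "FUNCTION",
            "EXTENDED", "CASE", "WHEN", "THEN", "ELSE", "END", "DATABASE", "CROSS");

    // HiveQL keywords are case-insensitive, so compare in upper case.
    static boolean isReserved(String name) {
        return RESERVED.contains(name.toUpperCase(Locale.ROOT));
    }

    // Backtick-quote a column name only when it appears in the list above.
    static String quoteIfReserved(String name) {
        return isReserved(name) ? "`" + name + "`" : name;
    }

    public static void main(String[] args) {
        for (String col : new String[] {"cid", "end", "category"}) {
            System.out.println(col + " -> " + quoteIfReserved(col));
        }
    }
}
```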

Solution 2

You can always escape a reserved keyword if you still want your query to work.

Just replace end with `end`.

The list of reserved keywords is here:

https://cwiki.apache.org/confluence/display/Hive/LanguageManual+DDL

CREATE EXTERNAL TABLE moveProjects (cid string, `end` string, category string)
STORED BY 'org.apache.hadoop.hive.dynamodb.DynamoDBStorageHandler'
TBLPROPERTIES ("dynamodb.table.name" = "Projects",
"dynamodb.column.mapping" = "cid:cid,end:end,category:category");

Solution 3

I was using /Date=20161003 in the folder path for an INSERT OVERWRITE and it was failing. I changed it to /Dt=20161003 and it worked.

Related videos on Youtube

08 : 23

How to Unlock 100% LHR in win and HiveOS — nbminer More Stable

04 : 08

How to load Hive table into PySpark?

06 : 23

NBMiner 100% LHR Unlock HiveOS

16 : 45

NB Miner 41.0 Unlock LHR Card 💯 In HIVEOS — How To Get Full Megahash Step By Step

07 : 00

Using Spark and Hive — PART 1: Spark as ETL tool

03 : 22

How to Fix GPU Driver Error, No Temps on HiveOS

07 : 06

Cloudera Administration — Troubleshooting Hive Issues

03 : 48

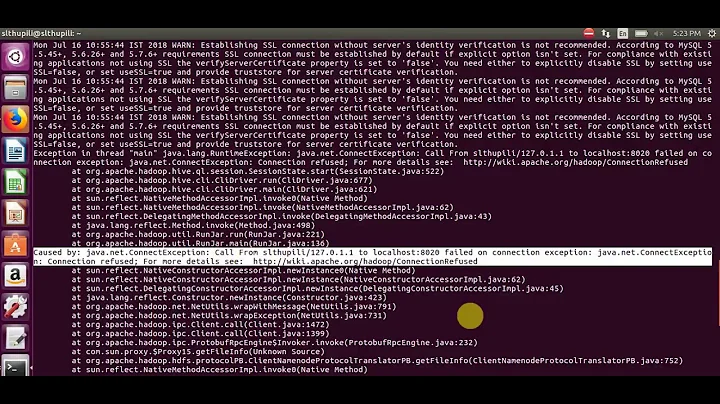

Connection refused error when running HIVE in Hadoop EcoSystem

06 : 46

01 : 06 : 11

Big Data Certifications Workshop 025 — Hive Query Language Contd… — Aggregations

01 : 38

How do I resolve the error “FAILED: ParseException line 1:X missing EOF” in Amazon Athena?

21 : 45

Hướng dẫn cài đặt và sử dụng hệ điều hành HIVEOS cho mining,How to install and use HIVEOS for mining

04 : 05

How to Fix Connection to API server failed on HiveOS

Comments

- I am getting the following error when trying to create a Hive table from an existing DynamoDB table:

  NoViableAltException(88@[]) at org.apache.hadoop.hive.ql.parse.HiveParser_IdentifiersParser.identifier(HiveParser_IdentifiersParser.java:9123) at org.apache.hadoop.hive.ql.parse.HiveParser.identifier(HiveParser.java:30750) ...more stack trace... FAILED: ParseException line 1:77 cannot recognize input near 'end' 'string' ',' in column specification

  The query looks like this (simplified to protect the innocent):

  CREATE EXTERNAL TABLE moveProjects (cid string, end string, category string) STORED BY 'org.apache.hadoop.hive.dynamodb.DynamoDBStorageHandler' TBLPROPERTIES ("dynamodb.table.name" = "Projects", "dynamodb.column.mapping" = "cid:cid,end:end,category:category");

  Basically, I am trying to create a Hive table containing the contents of the Projects DynamoDB table, but the CREATE statement throws a parse error from Hive/Hadoop.

- Good observation. Thank you.

- I ran into a similar issue with a column named _1. Renaming it solved the problem. Thanks for the answer.

- Do you know how we can partition on /20161003?

- "div" was another keyword. Quoting it with backticks made it work. Error message: FAILED: ParseException line 52:16 cannot recognize input near 'div' 'STRING' ',' in column specification